Artificial intelligence (AI)

Concepts

Artificial intelligence (AI) is, according to Wikipedia’s definition, a computer or a computer program capable of performing actions considered intelligent. A more precise definition remains open, because intelligence itself is difficult to define.

Artificial Narrow Intelligence (ANI) is a type of artificial intelligence designed to perform a specific task or set of tasks. It is also known as weak artificial intelligence or adaptive artificial intelligence. All artificial intelligence systems in use today, such as voice assistants Alexa and Siri, Tesla’s driving assistant or ChatGPT, are so-called narrow artificial intelligence applications.

Artificial General Intelligence (AGI) is a type of artificial intelligence capable of learning any intellectual task that a person can perform. It is a hypothetical concept that has yet to be achieved in practice, but it is often used as a benchmark when evaluating the capabilities of current AI systems. The goal of AGI development is a system that could independently learn any task, such as driving a car, cooking, or analyzing a large amount of data.

Generative AI is a branch of machine learning that uses algorithms and data to create something new. Let’s think about painting, for example. You have a bunch of paintings – impressionism, cubism, realism, etc. – and you want the AI to create its own work of art. A generative artificial intelligence that has studied these styles is able to create its own work of art, which is new and unique, but which takes influences from the studied styles.

One well-known type of generative AI, the generative adversarial network (GAN), works with two main components: a generator and a discriminator. The generator creates new, authentic-feeling results, such as paintings in our example. The discriminator, on the other hand, evaluates these creations and compares them with the examples it has learned from: it tries to distinguish real artworks painted by real artists from images created by artificial intelligence. The generator then tries to improve its creations based on the discriminator’s feedback until it produces something that the discriminator accepts as real. Other generative systems, such as the language models described below, work differently.

This process is like an evolving game where the generator and the discriminator compete with each other. This “game” helps AI learn and develop new, creative ideas that can mimic or even surpass original designs. And this is the essence of generative AI: it not only learns to understand data, but also creates new, innovative ideas based on what it has learned.
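The generator-and-discriminator game described above (the setup used in generative adversarial networks, GANs) can be sketched in a few lines of Python. This is a deliberately tiny toy, not how image generators are built: the "artworks" are single numbers near 4, the generator is a straight line `a * z + b`, and the discriminator is a single logistic unit. All names, the learning rate, and the data distribution are assumptions chosen for illustration.

```python
import math
import random

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def discriminator(x, w, c):
    """Estimated probability (between 0 and 1) that sample x is real."""
    return sigmoid(w * x + c)

def generator(z, a, b):
    """Turn random noise z into a fake sample."""
    return a * z + b

def discriminator_step(real, fake, w, c, lr):
    """One gradient-ascent step on log D(real) + log(1 - D(fake))."""
    s_real = discriminator(real, w, c)
    s_fake = discriminator(fake, w, c)
    w += lr * ((1.0 - s_real) * real - s_fake * fake)
    c += lr * ((1.0 - s_real) - s_fake)
    return w, c

def generator_step(z, a, b, w, c, lr):
    """One gradient-ascent step on log D(G(z)): follow the discriminator's feedback."""
    s_fake = discriminator(generator(z, a, b), w, c)
    feedback = (1.0 - s_fake) * w  # derivative of log D(x) at the fake sample
    a += lr * feedback * z
    b += lr * feedback
    return a, b

def train(steps=2000, lr=0.01, seed=0):
    """Alternate discriminator and generator updates (toy setting)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator starts far from the real data
    w, c = 0.0, 0.0  # discriminator starts undecided (always outputs 0.5)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)  # assumed "real" data: numbers near 4
        z = rng.gauss(0.0, 1.0)
        fake = generator(z, a, b)
        w, c = discriminator_step(real, fake, w, c, lr)
        a, b = generator_step(z, a, b, w, c, lr)
    return a, b, w, c
```

Calling `train()` returns the final generator and discriminator parameters. In this toy, the generator’s offset `b` is pushed toward the region the discriminator currently rates as real, although small adversarial setups like this one can oscillate rather than settle.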

Artificial intelligence = Support intelligence

We should rather talk about artificial intelligence as support intelligence. It functions excellently as an assistant, ideator, sparring partner, mentor, enhancer, etc. However, it does not remove the need for the user’s subject matter expertise. On the contrary, it emphasizes that expertise, because we need it to verify the accuracy of the information produced by artificial intelligence. Used this way, it also supports independent thinking.

Language models

Large language models (LLMs) are the ‘engines’ of generative artificial intelligence applications, which, among other things, can read, summarize, and translate texts (watch a video about the technology behind language models on YouTube). They are capable of processing human-generated text. They predict the next tokens (words or word fragments) in a sequence based on probabilities learned through machine learning, which allows them to create sentences similar to those spoken and written by humans. The task of a language model is thus to generate human-like, fluent text based on the input (prompt) given to it. The most common way to provide input to an AI application is through text typed into a text field.
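The idea of predicting what comes next from learned probabilities can be illustrated with a very small sketch. It counts which word follows which in a short made-up text and then generates a continuation by sampling from those counts. Real language models use neural networks trained on enormous amounts of text instead of simple counts; the corpus and function names here are invented for illustration.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def next_word_probabilities(counts, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    followers = counts.get(word.lower(), Counter())
    total = sum(followers.values())
    return {w: n / total for w, n in followers.items()} if total else {}

def generate(counts, prompt, length=5, seed=0):
    """Extend the prompt word by word, sampling each next word by probability."""
    rng = random.Random(seed)
    output = prompt.lower().split()
    for _ in range(length):
        probs = next_word_probabilities(counts, output[-1])
        if not probs:
            break  # the last word was never followed by anything in training
        words, weights = zip(*probs.items())
        output.append(rng.choices(words, weights=weights)[0])
    return " ".join(output)

# Made-up training text, far smaller than anything a real model would use.
corpus = ("the man listens to music . the man listens to the radio . "
          "the woman listens to music .")
model = train_bigram(corpus)
probabilities_after_to = next_word_probabilities(model, "to")
continuation = generate(model, "the man")
```

In this corpus "to" is followed by "music" twice and "the" once, so the model assigns them probabilities of two thirds and one third. The sketch has no notion of whether a sentence is true; it only reproduces patterns from its training text.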

A grammatically correct and reasonable-sounding text creates an illusion that the answer is correct, even though it may be completely wrong. Substantive knowledge of the subject is therefore still needed. Often, checking the accuracy of text written by artificial intelligence and citing the sources is more laborious than producing the text directly from the sources.

Article: Näin ChatGPT syntyi – kukaan ei täysin ymmärrä, miten kielimallit toimivat ("This is how ChatGPT was born: nobody fully understands how language models work", Tivi, 7 September 2023), opens with HAMK credentials (only in Finnish)

The language model is not intelligent

A language model is just a program that generates text in response to the input it is given, using probabilities derived from its source material. It has no knowledge or understanding of the content, although a fluent and grammatically correct answer may give the opposite impression. The responsibility for the correctness of the written information rests with the user of the artificial intelligence.

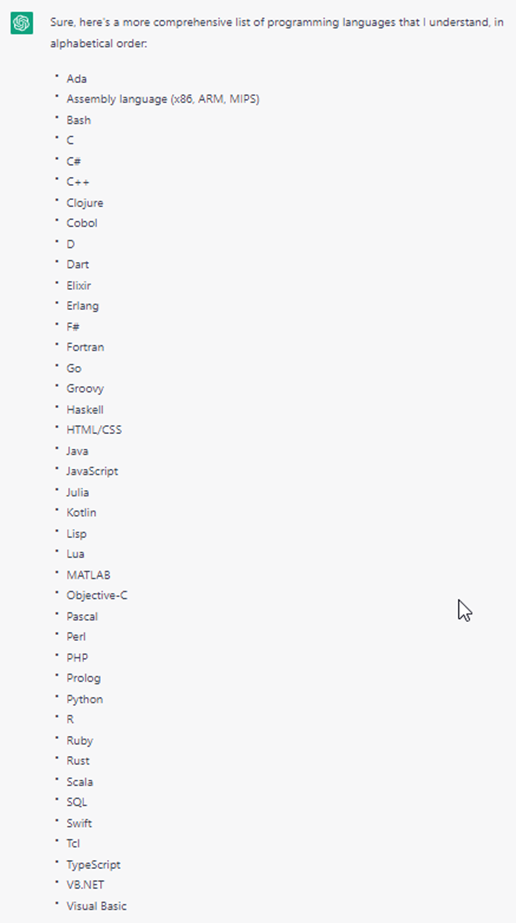

Language models can handle prompt content in dozens of languages, including programming languages, but this varies from application to application.

Language models serve as the foundation for generative artificial intelligence applications. That is, they create responses according to the wishes expressed in the input. For example, ChatGPT produces text that, at its best, cannot be distinguished from text written by a human. In addition, image generators like DALL-E 3 can create and modify images based on the text inputs given to them. Each application can be taught its own specific task through machine learning. Machine learning is a subfield of artificial intelligence aimed at enabling an application to improve its performance based on training data and, possibly, user actions. The apparent intelligence results from the vast computing capacity with which the language model compares the input against the generalizations it has formed from a massive amount of text material. It does not create anything new out of nothing, but it combines existing data in entirely new ways and at a speed that humans are not capable of.

Limitations of generative artificial intelligence

Artificial intelligence reflects the source material fed to it. As a limitation on its use, you have to remember that the information may be incorrect, i.e. the answer may contain hallucinations. In addition, it may offer biased or damaging information due to the source material used in training the language model. One reason is that the vast majority of the source material used to train the language model comes from Western countries. Material from, for example, China or Africa is underrepresented, which weakens the quality of the application and its ability to produce impartial and equal information that takes different cultures into account. The responsibility for the correctness of the written information rests with the user of the artificial intelligence. Artificial intelligence itself does not care whether what it generates for the user is true, because its task is only to generate output, such as text.

GPT-3

GPT-3 (Generative Pre-trained Transformer 3) is the third version of the language model developed by OpenAI, released in spring 2020. It is trained on a large amount of text to predict the next word in a sequence based on the words that precede it. For example, if the model is given the words “The man listens”, it may predict the next word to be “music”. The prediction is determined by the model’s parameters, which are numerical weights adjusted during training rather than hand-written “if-then” rules. GPT-3 has 175 billion of these parameters.
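As a rough sketch of the last step of such a prediction: a model assigns a score to every candidate next word, and a function called softmax turns those scores into probabilities. The candidate words and scores below are invented for illustration; in a real model they would be computed from the prompt by the network’s billions of learned parameters.

```python
import math

def softmax(scores):
    """Convert arbitrary scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtracting the maximum keeps exp() stable
    exps = {word: math.exp(s - highest) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Hypothetical scores for candidate next words after "The man listens".
scores = {"music": 3.0, "to": 2.5, "carefully": 1.0, "banana": -2.0}

probabilities = softmax(scores)
prediction = max(probabilities, key=probabilities.get)  # most probable word
```

With these made-up scores the most probable continuation is "music", but "to" and "carefully" also keep some probability. Applications often sample from the distribution instead of always taking the top word, which is one reason the same prompt can produce different answers.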

Its limitations include incorrect information, i.e. hallucination, and possibly biased or damaging information due to the source material used in training the language model. In addition, the data in the dataset has not been updated after December 2021.

Version 3.5 of this language model was the engine of the application ChatGPT, which was released in late November 2022.

GPT-4

GPT-4 was published on March 14, 2023. Neither the number of parameters nor the size of the training data has been disclosed, but the model is said to be more creative, to understand more complex instructions, and to solve more complex problems than previous language models. Publicly available data (from the internet) and data licensed from third parties have been used as source material for training the language model.

It can help with more demanding and complex creative and technical writing tasks, such as composing songs, writing scripts or learning a user’s writing style. In addition, GPT-4 accepts images as input and can generate descriptions, classifications, and analyses of their content. The ability to handle larger amounts of text as input has also been improved. GPT-4 can handle more than 25,000 words of text, enabling use cases such as long-form content creation, extended discussions, and document search and analysis.

GPT-4 is also more factually accurate. Depending on the subject, it is reported to get 70-80% of facts correct, whereas GPT-3.5 got on average 50-60% correct. However, it can still provide incorrect or biased information, like its predecessor. Its dataset covers the period until December 2022.

At the time of its release, this language model was available in the paid version of ChatGPT, ChatGPT Plus.

GPT-4o (omni), ChatGPT language model

GPT-4o (“o” stands for “omni”) was released on May 13, 2024, and represents a significant advance toward more natural human-computer interaction. The model can reason in real time across text, audio, and image inputs (prompts), which enables natural and versatile interaction with the application. It carries on a dialogue with the user, answers follow-up questions and, if necessary, asks the user for clarification.

With fast response times to audio input, GPT-4o aims to mimic human conversation and provide a seamless dialogue experience. The model demonstrates enhanced performance in image and audio understanding compared to previous versions. By handling text, audio, and image processing in a single model, GPT-4o streamlines its input-output process, thereby improving its efficiency. It matches the performance of GPT-4 Turbo for English text and code, but offers significant improvements in handling other languages, while being significantly faster and 50% more affordable than GPT-4 Turbo when accessed through the API.

OpenAI has evaluated GPT-4o’s text, audio, and image understanding extensively and has published results demonstrating its performance. GPT-4o is now available for free to all users, with premium options offering higher capacity limits. Developers can use GPT-4o via the API for text and image processing, and there are plans to add audio and video capabilities in the future.
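For readers curious what using GPT-4o "via the API" looks like, the following sketch builds a request containing both a text question and an image link, using only Python’s standard library. The request shape follows OpenAI’s Chat Completions API as commonly documented, which may change over time; the key, question, and image address are placeholders, and the request is only constructed here, not sent.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"

def build_request(api_key, question, image_url):
    """Build (but do not send) a request with one text part and one image part."""
    payload = {
        "model": "gpt-4o",
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

# Placeholder values; a real call needs a valid API key.
request = build_request(
    "YOUR_API_KEY",
    "What is shown in this picture?",
    "https://example.com/painting.png",
)

# Sending the request requires network access and a valid key:
# with urllib.request.urlopen(request) as response:
#     answer = json.load(response)["choices"][0]["message"]["content"]
```

Anything sent this way leaves your own computer, so the usual cautions about confidential or personal data apply to API use just as they do to the chat interface.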

OpenAI o1, reasoning ability

On September 12, 2024, OpenAI introduced a large language model called o1, which is trained to perform complex reasoning using reinforcement learning. o1 is designed to think step by step before responding, using a “chain of thought” process.

Key features of OpenAI o1 preview:

- Improved reasoning: o1 shows better reasoning abilities compared to its predecessor, GPT-4o, in various benchmarks and tests.

- Chain of Thought Reasoning: The model uses a chain of thought process, mimicking human reasoning by breaking down problems into smaller steps, identifying errors, and exploring alternative approaches.

- Human-level performance: o1 achieves impressive results in standardized tests and benchmarks, even surpassing the performance of human experts in certain areas, such as GPQA-diamond assessments, which measure scientific expertise.

- Improved coding abilities: o1 demonstrates strong coding abilities, ranking in the 89th percentile on Codeforces programming challenges and outperforming GPT-4o on coding tasks.

Benefits of chain of thought reasoning:

- Transparency: The chain of thought provides a clear representation of the model’s reasoning process, allowing developers to view and understand its decision-making.

- Safety and alignment: Integrating safety practices into the chain of thought has shown promising results in improving the model’s safety and its alignment with human values.

Limitations and considerations:

- Not ideal for all tasks: While o1-preview performs well in reasoning-intensive domains, it may not be suitable for all natural language processing tasks, as found in human preference tests.

- Visibility of the chain of thought: Currently, the raw chain of thought is not shown directly to users. Instead, users see a summary produced by the model. This decision seeks to strike a balance between transparency, user experience, and the risk of abuse.

Overall, the OpenAI o1 preview represents a significant step forward in AI reasoning, expanding the boundaries of the model’s capabilities and opening up new possibilities for AI applications in various fields.

Links (open in a new browser window)

- OpenAI

- GPT-4o

- OpenAI o1

- ChatGPT

- Google Gemini

- Image generators:

- Arene’s recommendations for universities of applied sciences regarding the use of artificial intelligence

- Tekoälyn pikaopas – Näin käytät tekoälyä tietotyössä (pdf), Lauri Järvilehto (only in Finnish)

- ChatGPT-opas ensiaskeleen ottavalle opettajalle, Otavia opisto (only in Finnish)

- Finnish research project Generation AI

- Using AI responsibly

- Courses on the subject

- The Elements of AI – Basics of artificial intelligence (HY)

- PRACTICAL AI – MITÄ JOKAISEN TULISI TIETÄÄ TEKOÄLYSTÄ (Edukamu) (only in Finnish)

- Tekoäly opetuksen tukena (Jyu) (only in Finnish)

- The Ethics of AI (HY)

- ChatGPT – tee työtä tekoälyn kanssa (Eduhouse)